Restricted Boltzman Machine

Table of Contents:

- RBM Definition

- Marginal Conditional Distribution

- Conditional Distribution

- Maximum Likelihood Estimation

RBM Definition

A Restricted Boltzmann machine (RBM) is a generative neural network that can learn a probability distribution over its set of inputs. We can perform inference within an RBM.

Previously used in image processing and speech recognition (Mohamed and Hinton, 2010)

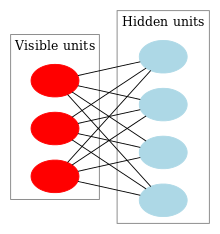

Concretely, an RBM is an undirected graphical model defined on variables \((\mathbf{v}, \mathbf{h}), \mathbf{v} \in \{0, 1\}^m\) and \(\mathbf{h} \in \{0, 1\}^n\). The joint probability is given by:

\[P(\mathbf{v}, \mathbf{h}) = \frac{1}{Z} exp(\phi(\mathbf{v}, \mathbf{h}))\]where

\[\phi(\mathbf{v}, \mathbf{h}) = −\alpha^T \mathbf{v} − \beta^T \mathbf{h} − \mathbf{v}^T W \mathbf{h}\]is a potential function. Here \(\alpha \in \mathbb{R}^m, \beta \in \mathbb{R}^n, W \in \mathbb{R}^{m \times n}\) and \(Z\) is the normalizing constant. We can interpret it as a fully connected bipartite network with two layers: one for visible variables \(\mathbf{v}\) and one for hidden variables \(\mathbf{h}\).

In image processing, the pixels could correspond to “visible” units of the RBM because their states are observed; the feature detectors correspond to “hidden” units [2].

Marginal conditional distribution of for a single hidden variable

Suppose we wish to compute \(P(\mathbf{h}_i \mid \mathbf{v})\) for a single hidden variable \(\mathbf{h}_i\).

\begin{equation} P(h_i \mid \mathbf{v} ) = \sum\limits_{h_1,\dots, h_{i-1},h_{i+1},\dots,h_n} p(h_1 \cap \dots \cap h_i \cap \dots \cap h_n \mid v_1 \cap \dots \cap v_n) \end{equation}

\begin{equation} p(h_i \mid v) = \frac{p(h_i,v)}{p(v)} = \frac{\sum\limits_{h_1,\dots,h_{i-1},h_{i+1},\dots,h_n} p(v,h)}{\sum\limits_{h_1,\dots,h_n}p(v,h)} \end{equation} By definition \(p(\mathbf{v},\mathbf{h})\) is \begin{equation} p(h_i \mid v) = \frac{p(h_i,v)}{p(v)} = \frac{\sum\limits_{h_1,\dots,h_{i-1},h_{i+1},\dots,h_n} \frac{1}{Z} \exp(-\alpha^Tv - \beta^Th - v^TWh) }{\sum\limits_{h_1,\dots,h_n} \frac{1}{Z} \exp(-\alpha^Tv - \beta^Th - v^TWh) } \end{equation} Sums in exponents correspond to multiplication of the base as follows: \begin{equation} p(h_i \mid v) = \frac{p(h_i,v)}{p(v)} = \frac{\sum\limits_{h_1,\dots,h_{i-1},h_{i+1},\dots,h_n} \frac{1}{Z} \exp(-\alpha^Tv) \exp(- \beta^Th) \exp(- v^TWh) }{\sum\limits_{h_1,\dots,h_n} \frac{1}{Z} \exp(-\alpha^Tv) \exp(- \beta^Th) \exp(- v^TWh) } \end{equation} Let us pull out the partition function and other terms that do not depend on \(h\): \begin{equation} p(h_i \mid v) = \frac{p(h_i,v)}{p(v)} = \frac{ \frac{1}{Z} \exp(-\alpha^Tv) \sum\limits_{h_1,\dots,h_{i-1},h_{i+1},\dots,h_n} \mbox{exp}(- \beta^Th) \mbox{exp}(- v^TWh) }{\frac{1}{Z} \exp(-\alpha^Tv) \sum\limits_{h_1,\dots,h_n} \mbox{exp}(- \beta^Th) \exp(- v^TWh) } \end{equation}

Since we assume \(\alpha\) is known, and we are conditioning on the visible units \(\mathbf{v}\), we can treat these as constant with respect to the distribution. Then the expression \(\frac{1}{Z} \exp(-\alpha^Tv)\) is a scalar and cancels in the numerator and denominator. We are left with:

\begin{equation} p(h_i \mid v) = \frac{p(h_i,v)}{p(v)} = \frac{ \sum\limits_{h_1,\dots,h_{i-1},h_{i+1},\dots,h_n} \exp(- \beta^Th) \exp(- v^TWh) }{ \sum\limits_{h_1,\dots,h_n} \exp(- \beta^Th) \mbox{exp}(- v^TWh) } \end{equation} Expanding inner products and quadratic forms:

\begin{equation} p(h_i \mid v) = \frac{p(h_i,v)}{p(v)} = \frac{ \sum\limits_{h_1,\dots,h_{i-1},h_{i+1},\dots,h_n} \exp(- \sum\limits_{k=1}^{n}\beta_k h_k) \exp(- \sum\limits_{j=1}^{m} \sum\limits_{k=1}^{n} W_{jk}v_j h_k) }{ \sum\limits_{h_1,\dots,h_n} \exp(- \sum\limits_{k=1}^{n}\beta_k h_k) \exp(- \sum\limits_{j=1}^{m} \sum\limits_{k=1}^{n} W_{jk}v_j h_k) } \end{equation}

Let’s focus on factorizing the sums. We know the following properties of summation: \begin{equation} \sum\limits_{x_1}\sum\limits_{x_2}x_1x_2 = \sum\limits_{x_1}x_1 (\sum\limits_{x_2} x_2) = (\sum\limits_{x_1}x_1)(\sum\limits_{x_2}x_2) \end{equation}

Consider the expression \begin{equation} \sum\limits_{h_1,\dots,h_{i-1},h_{i+1},\dots,h_n} \mbox{exp}(- \sum\limits_{k=1}^{n}\beta_k h_k) \mbox{exp}(- \sum\limits_{j=1}^{m} \sum\limits_{k=1}^{n} W_{jk}v_j h_k) \end{equation} This can be written cleanly as \begin{equation} \sum\limits_{h_1,\dots,h_{i-1},h_{i+1},\dots,h_n} \mbox{exp}(- \sum\limits_{k=1}^{n}\beta_k h_k + - \sum\limits_{j=1}^{m} \sum\limits_{k=1}^{n} W_{jk}v_j h_k) \end{equation} The order of summation can be swapped in the RHS \begin{equation} \sum\limits_{h_1,\dots,h_{i-1},h_{i+1},\dots,h_n} \mbox{exp}( \sum\limits_{k=1}^{n}-\beta_k h_k + \sum\limits_{k=1}^{n}\sum\limits_{j=1}^{m} -W_{jk}v_j h_k) \end{equation} We can now distribute a sum: \begin{equation} \sum\limits_{h_1,\dots,h_{i-1},h_{i+1},\dots,h_n} \mbox{exp}( \sum\limits_{k=1}^{n} \Bigg[ -\beta_k h_k + \sum\limits_{j=1}^{m} W_{jk}v_j h_k\Bigg]) \end{equation} We can now distribute \(h_k\): We can now distribute a sum: \begin{equation} \sum\limits_{h_1,\dots,h_{i-1},h_{i+1},\dots,h_n} \mbox{exp}( \sum\limits_{k=1}^{n} - h_k \Bigg[\beta_k + \sum\limits_{j=1}^{m} W_{jk}v_j \Bigg]) \end{equation} Here is the crucial insight: the exponent of a sum is the product of the exponents of each term. Thus, we can decompose this expression into \(\begin{aligned} \sum\limits_{h_1,\dots,h_{i-1},h_{i+1},\dots,h_n} \mbox{exp}( -h_1 \Bigg[\beta_1 + \sum\limits_{j=1}^{m} W_{j1}v_j \Bigg]) \cdot \dots \\ \mbox{exp}( -h_k \Bigg[\beta_k + \sum\limits_{j=1}^{m} W_{jk}v_j \Bigg]) \cdot \dots \\ \mbox{exp}( -h_n \Bigg[\beta_n + \sum\limits_{j=1}^{m} W_{jm}v_j \Bigg]) \end{aligned}\)

We can \(n-1\) sums from this \(\begin{aligned} \sum\limits_{h_1} \dots \sum\limits_{h_{i-1}} \sum\limits_{h_{i+1}} \dots \sum\limits_{h_n} \mbox{exp}( -h_1 \Bigg[\beta_1 + \sum\limits_{j=1}^{m} W_{j1}v_j \Bigg]) \cdot \dots \\ \mbox{exp}( -h_k \Bigg[\beta_k + \sum\limits_{j=1}^{m} W_{jk}v_j \Bigg]) \cdot \dots \\ \mbox{exp}( -h_n \Bigg[\beta_n + \sum\limits_{j=1}^{m} W_{jm}v_j \Bigg]) \end{aligned}\)

into this, where every variable is summed over \textbf{except for} \(\mathbf{i}\) in the numerator:

\[\begin{aligned} \mbox{exp}( -h_i \Bigg[\beta_i + \sum\limits_{j=1}^{m} W_{ji}v_j \Bigg]) \Bigg(\sum\limits_{h_1} \mbox{exp}( -h_1 \Bigg[\beta_1 + \sum\limits_{j=1}^{m} W_{j1}v_j \Bigg]) \Bigg)\cdot \dots \\ \dots \Bigg(\sum\limits_{h_{i-1}} \mbox{exp}( -h_{i-1} \Bigg[\beta_{i-1} + \sum\limits_{j=1}^{m} W_{j(k-1)}v_j \Bigg]) \Bigg) \cdot \dots \\ \Bigg(\sum\limits_{h_{i+1}} \Bigg(\mbox{exp}( -h_{i+1} \Bigg[\beta_{i+1} + \sum\limits_{j=1}^{m} W_{j(i+1)}v_j \Bigg]) \Bigg) \cdot \dots \\ \Bigg(\sum\limits_{h_n} \mbox{exp}( -h_n \Bigg[\beta_n + \sum\limits_{j=1}^{m} W_{jm}v_j \Bigg]) \Bigg) \end{aligned}\]Since we have one additional sum in the denominator than in the numerator: \begin{equation} p(h_i \mid v) = \frac{p(h_i,v)}{p(v)} = \frac{ \sum\limits_{h_1,\dots,h_{i-1},h_{i+1},\dots,h_n} (\cdot) }{ \sum\limits_{h_1,\dots,h_n} (\cdot) } \end{equation} We can see that most of the terms will cancel in the top and we will be left with :

\begin{equation} =\frac{ \mbox{exp}( -h_i \Bigg[\beta_i + \sum\limits_{j=1}^{m} W_{ji}v_j \Bigg])}{ \sum\limits_{h_i}\mbox{exp}( -h_i \Bigg[\beta_i + \sum\limits_{j=1}^{m} W_{ji}v_j \Bigg])} \end{equation}

We know that the hidden units are binary (\(h_j \in \{0,1\}\)), so we could equivalently write (just as Goodfellow, Bengio, and Courville do in \textit{Deep Learning, page 656}) \begin{equation} p(h_j=1 \mid \mathbf{v}) = \frac{ \tilde{P}(h_j=1 \mid \mathbf{v}) }{\tilde{P}(h_j=0 \mid \mathbf{v}) + \tilde{P}(h_j=1 \mid \mathbf{v}) } \end{equation}

\[= \frac{ \mbox{exp}(\beta_i + v^T \mathbf{W}_{:,i}) }{ \mbox{exp}(0)+ \mbox{exp}(\beta_i + v^T \mathbf{W}_{:,i})}\](the sum can be compactly written an inner product with the \(i\)‘th column of \(W\)): \begin{equation} p(h_i \mid \mathbf{v})= \sigma ( \beta_i + \sum\limits_{j=1}^{m}W_{ji}v_j) = \sigma( \beta_i + v^TW_{:,i} ) \end{equation}

Conditional Distribution

Suppose we wish to compute the conditional distribution \(P (\mathbf{h} \mid \mathbf{v})\).

In undirected graphical models, conditional independence is offered by graph separation. Thus, if we condition upon all of the visible nodes \(\mathbf{v}\), then \(h_i\) will be completed disconnected from all other nodes in the graph

\begin{equation} P( \mathbf{h} \mid \mathbf{v} ) = \prod\limits_{i=1}^{n} P(\mathbf{h}_i \mid \mathbf{v}) \end{equation} Thus, inference in the conditional distribution is efficient (but inference over other distributions in the RBM is not efficient)

\[P( \mathbf{h} \mid \mathbf{v} ) = \frac{ \prod\limits_i \Big( \mbox{exp}(\beta_i + v^T \mathbf{W}_{:,i}) \Big) }{ \prod\limits_i \Big(1+ \mbox{exp}(\beta_i + v^T \mathbf{W}_{:,i}) \Big)}\]the product in the numerator reduces to summing in the exponents, as follows (we sum over the columns of \(\mathbf{W}\) because the dot product is linear, and the scalar is its own transpose \(v^T \mathbf{W} \mathbf{1} = \mathbf{1}^T \mathbf{W}^Tv\)):

\[P( \mathbf{h} \mid \mathbf{v} ) = \frac{ \mbox{exp}\Bigg( (\sum\limits_i \beta_i) + v^T (\sum\limits_i \mathbf{W}_{:,i}) \Bigg) }{ \prod\limits_i \Bigg(1+ \mbox{exp}(\beta_i + v^T \mathbf{W}_{:,i}) \Bigg)} = \frac{ \mbox{exp}\Bigg( \mathbf{1}^T \beta + v^T \mathbf{W} \mathbf{1} \Bigg) }{ \prod\limits_i \Bigg(1+ \mbox{exp}(\beta_i + v^T \mathbf{W}_{:,i}) \Bigg)} = \frac{ \mbox{exp}\Bigg( \mathbf{1}^T \beta + \mathbf{1}^T \mathbf{W}^Tv \Bigg) }{ \prod\limits_i \Bigg(1+ \mbox{exp}(\beta_i + v^T \mathbf{W}_{:,i}) \Bigg)}\]As Goodfellow, Bengio, and Courville demonstrate, this can also be written as \begin{equation} P( \mathbf{h} \mid \mathbf{v}) = \prod\limits_{j=1}^{n_h}\sigma \Bigg( (2h-1) \odot (\beta + \mathbf{W}^Tv) \Bigg) \end{equation}

Maximum Likelihood Estimation

Given a dataset of samples for the visible units \(\mathcal{D} = \{ \mathbf{v}^1, \cdots, \mathbf{v}^K \}\). We seek to learn the parameters of the RBM to maximize the likelihood \(\mathcal{L}\) of these samples. parameters that maximize the marginal likelihood of each sample

\[L(v) = \sum\limits_h P(h,v) = \sum\limits_h \frac{1}{Z} \exp(\phi(v,h)\]For a fixed v, can

Of course, the sum \(\sum\limits_h\) could be computed naively over all possible assignments, which happens to be \(2^n\) assignments because we have \(n\) hidden nodes, each of which is binary. This is obviously exponential in \(n\). We know a sum can be computed ``efficiently” if we can write it in factored form, e.g. \begin{equation} \sum\limits_{x_1}\sum\limits_{x_2}\sum\limits_{x_3}x_1x_2x_3 = (\sum\limits_{x_1}x_1) (\sum\limits_{x_2} x_2) (\sum\limits_{x_3} x_3) \end{equation}

Our solution to part (3A) clearly shows how this sum over \(\mathbf{h}\), \(\sum\limits_{\mathbf{h}}(\cdot)\), can be factorized and is tractable.

With a fixed h

The sum over \(\mathbf{v}\), \(\sum \limits_{\mathbf{v}}(\cdot)\), cannot be factorized. Thus, the sum cannot be computed efficiently.

Normalization Constant: Intractable

\[Z = \sum\limits_h \sum\limits_v \exp(\phi( \mathbf{v}, \mathbf{h}))\]This expression involves a sum over \(\mathbf{v}\), \(\sum \limits_{\mathbf{v}}\), which cannot be factorized. Thus, the sum cannot be computed efficiently.

With an intractable partition function, we turn to efficient MCMC sampling in the form of block Gibbs sampling for training. The intractable partition function \(Z\) implies that the normalized joint probability distribution \(P (\mathbf{v})\) is also intractable to evaluate, as it involves the term \(\frac{1}{Z}\).

References

[1.] Restricted Boltzmann machine. Wikipedia

[2.] Geoffrey Hinton. A Practical Guide to Training Restricted Boltzmann Machines. PDF